Prelaunch Checklist


I’m Stew, and I will be your mission commander today as we make our way through the Cloud Resume Challenge, created by Forrest Brazeal. I bring to this mission over a decade of experience as an entrepreneur and business executive. My journey began just over 10 years ago when, at 19, I started my company Stew’s Self Service Garage, an auto shop where members of the public can work on their own cars. We provide all the tools, equipment, and even some expert advice so you can come in and fix your car yourself. Think of it as a professional mechanic’s shop where you get to do the work. Now you may be thinking to yourself, shouldn’t this post have a car theme instead? Technically yes, but this website is built on Astro, therefore this post will be rocket themed.

After conceptualizing my business, bringing it to market, operating it, refining the business model, growing it, and eventually expanding to multiple locations, I was ready for a new challenge. At the end of summer 2023, I was speaking with a former AWS executive about where I might take my career next, and he suggested I look into cloud architecture. My existing business skills could come in handy when architecting cloud solutions, and I’ve always been interested in cloud technology.

Along the way I came across the Cloud Resume Challenge, a 16-step challenge designed to demonstrate DevOps ability in the cloud. It seemed like a great way to show off my newly acquired skills, since I wanted to make a personal website anyway. Reading through the challenge, I noticed that each phase came with several ‘mods’ suggested by Forrest: optional DevOps-, developer-, and security-themed modifications. I implemented 9 of the 11 listed mods because so many of them apply to real-world use cases, and I created a couple of mods of my own, which I’ll get to below. Each section below is named after a stage of getting a rocket into space and lists the challenge requirements it covers.

Hey, mission specialist (yes, you), we need to take our seats in mission control! The prelaunch checklist is complete, and it’s time for ignition.

Ignition

Challenge requirements: Certification, HTML/CSS Website

All prelaunch checklist items are complete, so let’s start the ignition cycle. This begins with getting AWS certified, which is the first place I applied a mod of my own. The challenge requires earning the AWS Certified Cloud Practitioner certification; I went further and earned both the Solutions Architect Associate and Professional certifications. I won’t go into much detail here, because the AWS certification process deserves its own post. I enjoy writing blog posts, so rather than clog this one up, here’s a separate post about the hundreds of hours of study it took to earn three AWS certifications on the first try.

With certifications in hand, it was time to create the website. The first official mod in the challenge is one of the easiest to apply: use a static site generator (SSG) instead of a single HTML page styled with CSS. Forrest suggested Hugo, but my research into Hugo kept pointing me to Astro. I liked what I found, so I went with Astro instead. Astro works as an SSG, but it is exceptionally powerful because it also offers selective server-side rendering (SSR). I wrote a separate post on this topic too, which you can read here. In it I cover what Astro is, my learning process, how I built the website, and a few other tricks up my sleeve. Hint: it’s 2024, and everyone is talking non-stop about AI.

Engine systems are looking good. Mission specialist, are you reading all systems go? Confirmed, all systems go. We have ignition. Prepare for liftoff.

Liftoff

Challenge requirements: JavaScript, Python, Database, Tests, Source Control

We have liftoff! With that, the website was functional, with most of its content in place. Next on the list was the view counter and the backend code behind it. Once again the challenge offered mods here, one of which was to build a unique visitor counter instead of a simple view counter. This requires both frontend and backend work to function properly. It is generally the hardest part of the challenge, because you must make a component on your website that relies on both the frontend and the backend. Most people save it for last, but given how I was building the website, it made sense to do it in the middle of the challenge.

I covered some of this part of the challenge in my post about creating the Astro website itself; if you’ve already read it, you can skip to the next paragraph. I started by building a functioning visit counter in a blank JS project in a separate environment, so I wouldn’t have to worry about framework issues while I was figuring out my JS code. I augmented my own JS with some publicly available snippets and used AI tools to get working code. From there I brought it into Astro, creating a React component by having Copilot translate my JS code to React. Copilot also translated the backend code from JS to Python, which I then converted into a Lambda handler.
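The post doesn’t include the backend code, so here is a minimal Python sketch of what a unique-visitor Lambda handler along these lines could look like. It assumes an API Gateway REST API proxy event (with the visitor’s IP at requestContext.identity.sourceIp), a single DynamoDB table keyed on a hypothetical id attribute, and a hypothetical table name, visitor-counter. Hashing the IP means no raw addresses end up in the table.

```python
import hashlib
import json


def visitor_id_from_event(event):
    """Derive an anonymous visitor ID from the caller's source IP.

    Assumes an API Gateway REST API proxy event. Hashing avoids storing raw IPs.
    """
    ip = event["requestContext"]["identity"]["sourceIp"]
    return hashlib.sha256(ip.encode("utf-8")).hexdigest()


def record_unique_visit(table, visitor_id):
    """Count a visitor once and return the unique-visitor total.

    `table` is anything with DynamoDB Table-style get_item/put_item/update_item
    methods, e.g. boto3.resource("dynamodb").Table(...). The key name "id",
    the counter item "unique_visitors", and the "visits" attribute are hypothetical.
    """
    seen = "Item" in table.get_item(Key={"id": visitor_id})
    if not seen:
        table.put_item(Item={"id": visitor_id})
        # ADD increments the counter atomically and creates it if missing.
        result = table.update_item(
            Key={"id": "unique_visitors"},
            UpdateExpression="ADD visits :one",
            ExpressionAttributeValues={":one": 1},
            ReturnValues="UPDATED_NEW",
        )
        return int(result["Attributes"]["visits"])
    counter = table.get_item(Key={"id": "unique_visitors"})
    return int(counter.get("Item", {}).get("visits", 0))


def lambda_handler(event, context, table=None):
    """Lambda entry point; `table` can be injected so the logic is testable offline."""
    if table is None:
        import boto3  # imported lazily so tests don't need AWS credentials

        table = boto3.resource("dynamodb").Table("visitor-counter")  # hypothetical name
    count = record_unique_visit(table, visitor_id_from_event(event))
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"count": count}),
    }
```

One design caveat: the get-then-put check is not atomic, so two simultaneous first visits from the same IP could both be counted. A conditional put_item with ConditionExpression="attribute_not_exists(id)" would close that gap, at the cost of handling the conditional-check failure.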

With both frontend and backend code written, I needed to test once more before hosting anything in AWS. The request flow looks like this: the frontend calls the backend through Amazon API Gateway, which triggers a Lambda function; the function reads the data from DynamoDB, processes it, updates the table, and returns a JSON response to the frontend via API Gateway. In Python you can write unit tests that mock these AWS services. There is a dependency you can install called mock that does exactly what it says: it mocks AWS services for your code during the unit test. With some prompt engineering I had a unit test for my backend code that passes when it receives the expected values for both the database write and the JSON response. I then created a GitHub repository for the backend, along with a GitHub Actions workflow to run the build.
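As an illustration of testing this way, here is a minimal unittest sketch that swaps the DynamoDB table for a unittest.mock.MagicMock and asserts on both the database write and the JSON response. The tiny handler is a hypothetical stand-in, included only so the block runs on its own, and the sketch uses the standard library’s unittest.mock rather than any particular AWS mocking package.

```python
import json
import unittest
from unittest import mock


def handler(event, table):
    """Hypothetical stand-in for the counter Lambda so this test runs on its own.

    Records a new visitor, bumps the counter, and returns a JSON body.
    A real handler might hash the IP; key and attribute names are made up.
    """
    visitor = event["requestContext"]["identity"]["sourceIp"]
    if "Item" not in table.get_item(Key={"id": visitor}):
        table.put_item(Item={"id": visitor})
        table.update_item(
            Key={"id": "unique_visitors"},
            UpdateExpression="ADD visits :one",
            ExpressionAttributeValues={":one": 1},
        )
    count = table.get_item(Key={"id": "unique_visitors"})["Item"]["visits"]
    return {"statusCode": 200, "body": json.dumps({"count": int(count)})}


class CounterHandlerTest(unittest.TestCase):
    event = {"requestContext": {"identity": {"sourceIp": "203.0.113.7"}}}

    def test_new_visitor_is_written_and_counted(self):
        table = mock.MagicMock()
        # First lookup: visitor not seen yet. Second lookup: the counter item.
        table.get_item.side_effect = [{}, {"Item": {"visits": 1}}]

        response = handler(self.event, table)

        table.put_item.assert_called_once_with(Item={"id": "203.0.113.7"})
        table.update_item.assert_called_once()
        self.assertEqual(response["statusCode"], 200)
        self.assertEqual(json.loads(response["body"]), {"count": 1})

    def test_returning_visitor_is_not_recounted(self):
        table = mock.MagicMock()
        table.get_item.side_effect = [
            {"Item": {"id": "203.0.113.7"}},
            {"Item": {"visits": 5}},
        ]

        response = handler(self.event, table)

        # No database write should happen for someone already counted.
        table.put_item.assert_not_called()
        table.update_item.assert_not_called()
        self.assertEqual(json.loads(response["body"]), {"count": 5})
```

Saved as test_counter.py, this runs with `python -m unittest test_counter`, which is also the kind of command a CI workflow step could call.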

For the frontend code, I wrote a Playwright test that launched a browser to test the counter. First, it confirmed the unique view count was greater than 0 and printed the value to the console every second for 5 seconds. Next, it intercepted the GET request, blocked the response, and confirmed the visit counter showed 0. I coded the counter to default to 0, so that if the GET failed or took a long time to load, at least something would be displayed. With the frontend also passing its tests, everything was ready for stage separation, also known as hosting everything in the cloud.
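The frontend itself is a React component, but the default-to-0 idea is language-agnostic. Here is a small Python sketch of the same behavior, not the site’s actual code: fetch_visit_count is a hypothetical helper that expects a JSON body with a count field, and its opener parameter is injectable so a test can simulate a blocked request, much as the Playwright test does when it intercepts the GET.

```python
import json
import urllib.request


def fetch_visit_count(url, timeout=2.0, opener=urllib.request.urlopen):
    """Return the visitor count from the API, or 0 if anything goes wrong.

    The {"count": N} response shape is an assumption for illustration.
    """
    try:
        with opener(url, timeout=timeout) as resp:
            return int(json.load(resp)["count"])
    except (OSError, ValueError, KeyError, TypeError):
        # URLError, HTTPError, and timeouts are all OSError subclasses;
        # bad JSON raises ValueError; a missing or odd field raises Key/TypeError.
        # Every failure falls back to the same default the UI shows: 0.
        return 0
```

Defaulting inside the fetch helper keeps the rendering code simple: it always gets a number, whether the API answered, timed out, or was blocked.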

First things first, though: I wanted to put the backend in the cloud and get an API Gateway URL so I could confirm it worked with my website running locally. I was familiar with CORS from the AWS courses, and I knew it can cause problems with API requests. I used click-ops to set up the backend services and host the backend code. Right away I hit an IAM permissions error: API Gateway didn’t have permission to invoke the Lambda function. With that fixed, I ran into the dreaded CORS issue. When you set up a REST API in API Gateway, there is a deceptive CORS checkbox that only creates an ‘OPTIONS’ method, which browsers use for the preflight request, rather than configuring CORS on GET, PUT, and the other methods. Without getting into the details here, that wasn’t going to work with my frontend code, and I was still getting a CORS error in the browser. After some research, I found the solution was to create a GET method, which makes an Enable CORS button appear in API Gateway. Once that was done, the CORS errors disappeared and the unique visitor counter officially showed 1 visitor. A quick check of the DynamoDB table confirmed it had the correct data too. Success!
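If the API uses Lambda proxy integration (an assumption; the post doesn’t say), API Gateway passes the Lambda’s response through without an integration response to add headers, so the function itself has to return the CORS headers on the GET response. A minimal sketch, with https://example.com as a hypothetical stand-in for the site’s real origin:

```python
import json

# Hypothetical origin; in practice this would be the site's own domain.
ALLOWED_ORIGIN = "https://example.com"


def cors_headers(origin=ALLOWED_ORIGIN):
    """Headers the browser checks before letting the page read the response."""
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": "GET,OPTIONS",
        "Access-Control-Allow-Headers": "Content-Type",
    }


def json_response(payload, status=200):
    """Build a Lambda-proxy response that a cross-origin fetch can read."""
    headers = {"Content-Type": "application/json", **cors_headers()}
    return {"statusCode": status, "headers": headers, "body": json.dumps(payload)}


def preflight_response():
    """Answer an OPTIONS preflight with an empty 204 and the same CORS headers."""
    return {"statusCode": 204, "headers": cors_headers(), "body": ""}
```

Pinning Access-Control-Allow-Origin to one origin instead of `*` means other sites’ pages can’t read the counter from a visitor’s browser.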

Specialist, stage detachment check. Confirmed ready? Prepare for stage separation.

Stage Separation

Challenge requirements: Source Control, Hosting, DNS, HTTPS


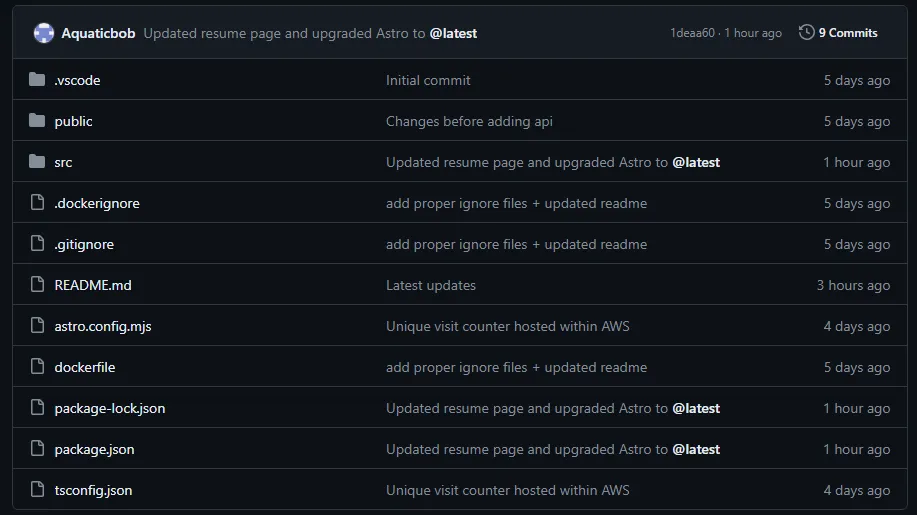

Stage separation confirmed, all systems nominal. In other words, all tests passed and we were ready to load the frontend into AWS via click-ops. Going back to the requirements, this is where another of my personal mods comes into play. Static hosting in S3 makes a lot of sense, but containers are a huge part of many organizations’ deployment strategies, so putting my website in a container would likely be a better demonstration of skill. I also have some server-side rendering to do, and S3 static hosting just won’t work for that. I set up a repository for the frontend code on GitHub to take advantage of source control and prepare for frontend CI/CD.

While I plan to learn Kubernetes (K8s), I don’t know it well enough yet to be comfortable deploying with it. I still wanted to use a container, so Amazon ECS was my choice for hosting the frontend. Conveniently, Astro provides an example Dockerfile, to which I made a couple of optimizations for my site. After writing a quick .dockerignore file, I was ready to create the image. Once Docker built the image, I pushed it to Amazon ECR, where it would be ready for ECS when I launched the service. But there were still a few more things to get done before I’d be ready for that stage.

I needed a domain name, and not just any name would do! Branding is a key part of any business strategy, so why not for me personally as well? That is how JustStewIt was born, and I quickly purchased it as my domain name. Mod alert! This part also contains one of Forrest’s mods: enabling DNSSEC, which protects against DNS spoofing, a form of man-in-the-middle attack. Setting up DNSSEC with Route 53 (R53) is straightforward, so I wasn’t about to pass up an easy mod. Now that DNS was ready to go, I also created certificates in AWS Certificate Manager (ACM) for CloudFront and for HTTPS.

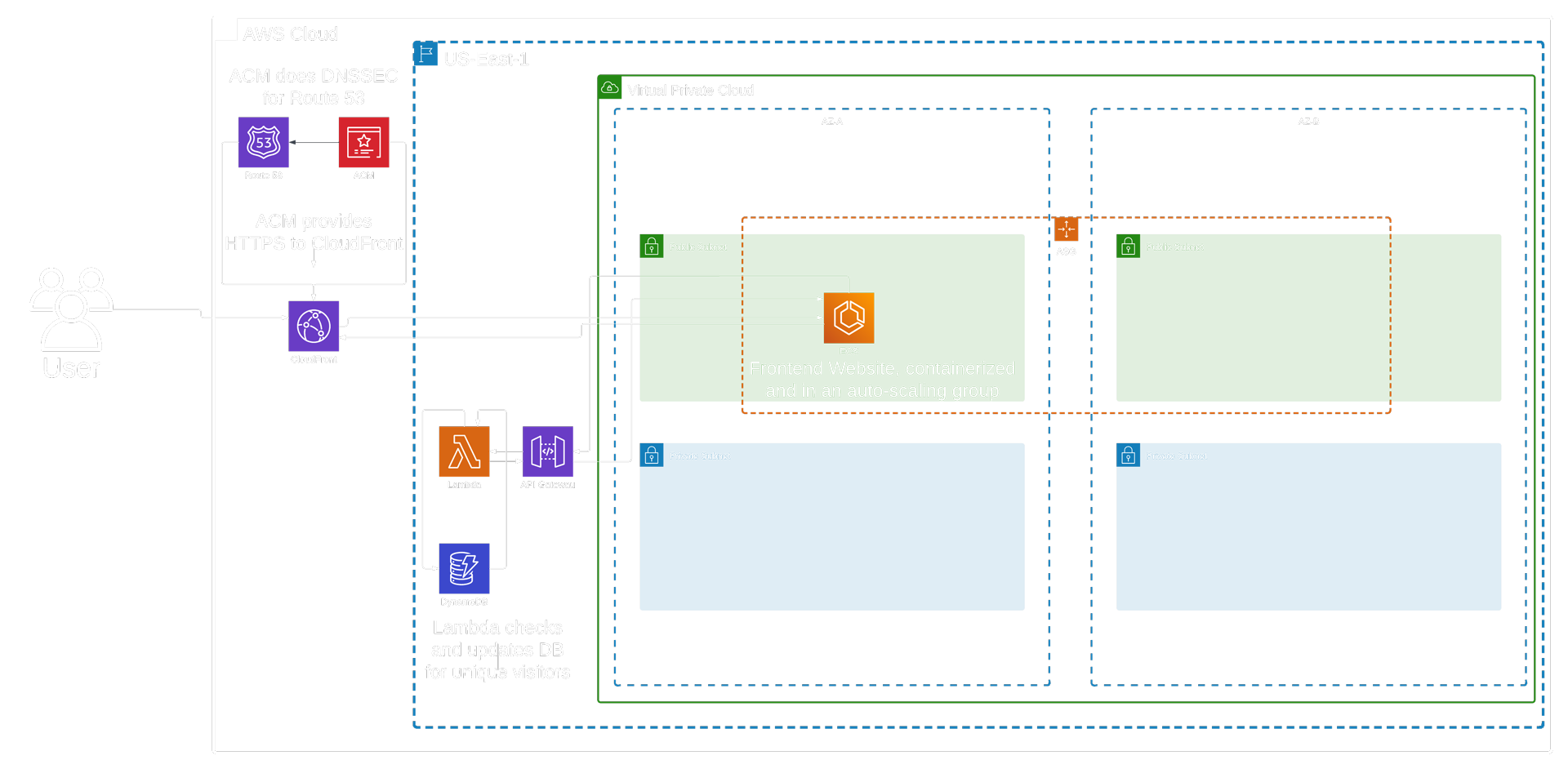

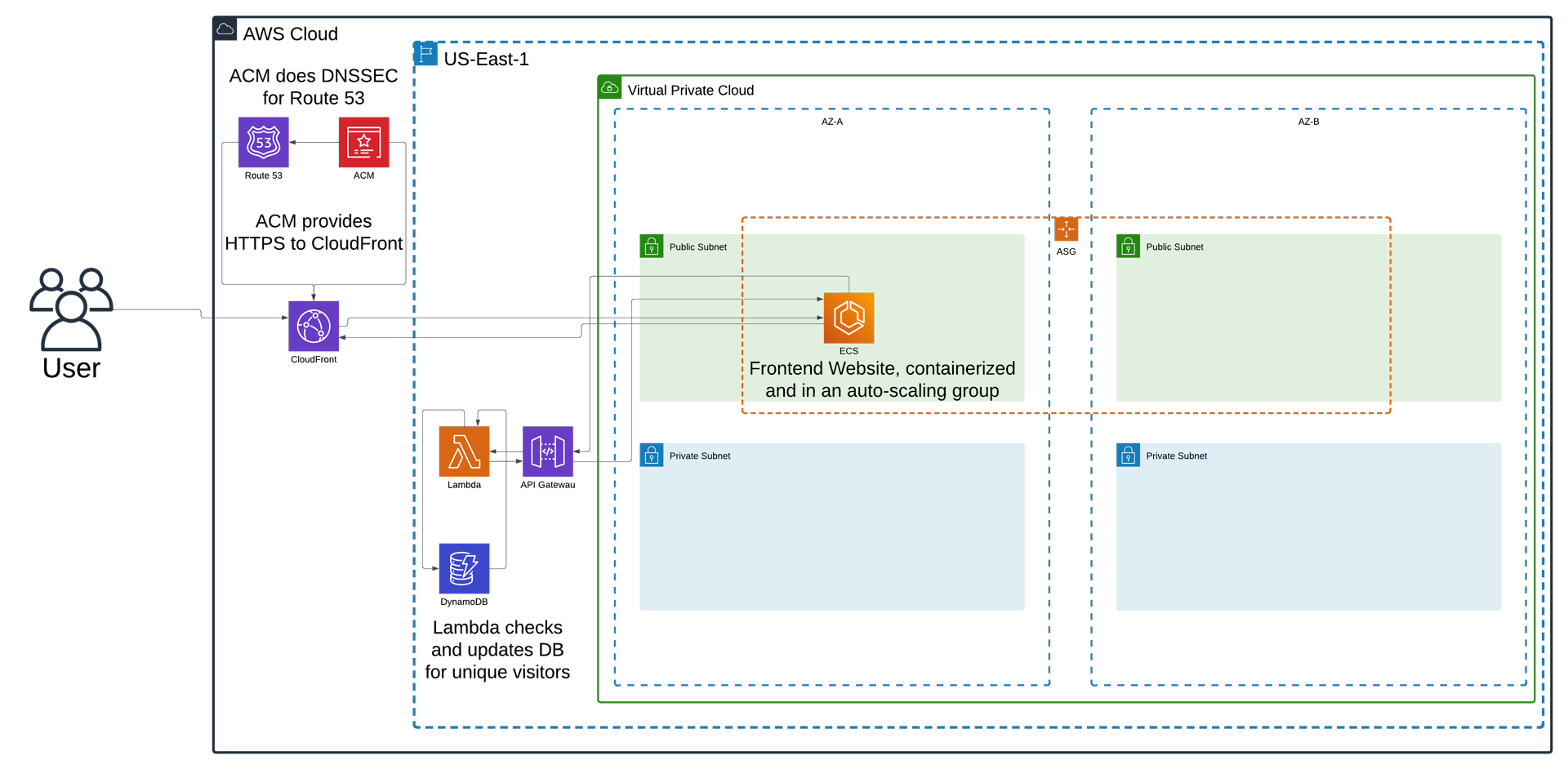

To take advantage of free tier benefits as much as possible, I opted for ECS on EC2, since this website would run 24/7/365. I don’t expect the website to go viral, but in case it does, I want to be ready. That meant creating a VPC with subnets in multiple Availability Zones (AZs), which provides high availability in case an AZ fails. After setting up routing for the VPC, I created the ECS cluster, then the task definition, and launched the service. A key step was making sure the containers could scale out on the instance and that replication was on for updates. The result was an ECS cluster with the service running behind an Application Load Balancer (ALB). My goals were scalability and as little downtime as possible.
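The “as little downtime as possible” goal comes down to how an ECS rolling update is bounded. Per the ECS deploymentConfiguration documentation, the service keeps at least minimumHealthyPercent of the desired task count running (rounded up) and never runs more than maximumPercent of it (rounded down). This small Python helper is just that arithmetic, not an AWS API call; the defaults of 100 and 200 are the ECS defaults for replica services.

```python
def rolling_update_bounds(desired_count, minimum_healthy_percent=100, maximum_percent=200):
    """Return (min_running, max_running) task counts during an ECS rolling update.

    Lower bound: minimumHealthyPercent of desiredCount, rounded up.
    Upper bound: maximumPercent of desiredCount, rounded down.
    Integer arithmetic avoids floating-point rounding surprises.
    """
    lower = -((-desired_count * minimum_healthy_percent) // 100)  # ceiling division
    upper = (desired_count * maximum_percent) // 100  # floor division
    return lower, upper
```

For example, rolling_update_bounds(2) gives (2, 4): ECS can start two new tasks before stopping the old ones, which is what makes a no-downtime deploy possible, provided the EC2 instances have spare CPU, memory, and ports for the extra tasks. Setting both percentages to 100 gives (2, 2), which leaves no room to swap tasks.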

I now had a fully running, cloud-hosted website whose frontend and backend communicate through an API. All that was left was to set up HTTPS and DNS for CloudFront. I created my CloudFront distribution, adding the alternate domain name and setting up cache behaviors for both the dynamic and static content of the website. In the process I created a new certificate for it through AWS Certificate Manager, which allows HTTPS connections. For the DNS, all I needed was an alias record in Route 53 pointing to the CloudFront distribution. After some DNS propagation, the website was live, served through CloudFront from an ALB origin. It’s been an adventure getting to this point, but it’s been very rewarding to see it work. Only a couple of things remained to complete the challenge along with all but two of the mods.
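CloudFront picks a cache behavior by checking each path pattern in precedence order and falling back to the default behavior when nothing matches. This Python sketch mimics that selection to show how static and dynamic content can be split. The patterns are hypothetical (though /_astro/ is where Astro writes its hashed build assets by default), CachingOptimized and CachingDisabled are AWS managed cache policies, and fnmatchcase only approximates CloudFront’s matching rules.

```python
from fnmatch import fnmatchcase

# Hypothetical behaviors in precedence order, like a distribution's cache
# behavior list. Hashed build assets never change, so they cache aggressively.
CACHE_BEHAVIORS = [
    ("/_astro/*", "CachingOptimized"),
    ("*.png", "CachingOptimized"),
]
# Everything else (the server-rendered pages) goes to the ALB origin uncached.
DEFAULT_BEHAVIOR = "CachingDisabled"


def behavior_for(path):
    """Return the cache policy for a request path; the first matching pattern wins.

    fnmatchcase approximates CloudFront's case-sensitive path patterns,
    where * matches any run of characters and ? matches exactly one.
    """
    for pattern, policy in CACHE_BEHAVIORS:
        if fnmatchcase(path, pattern):
            return policy
    return DEFAULT_BEHAVIOR
```

Because matching is case-sensitive and first-match-wins, the order of behaviors matters as much as the patterns themselves.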

All stations standby for burn sequence initiation. Specialist, give me a T-minus from 10.

Orbit Entry

Challenge requirements: IaC, CI/CD frontend, CI/CD backend

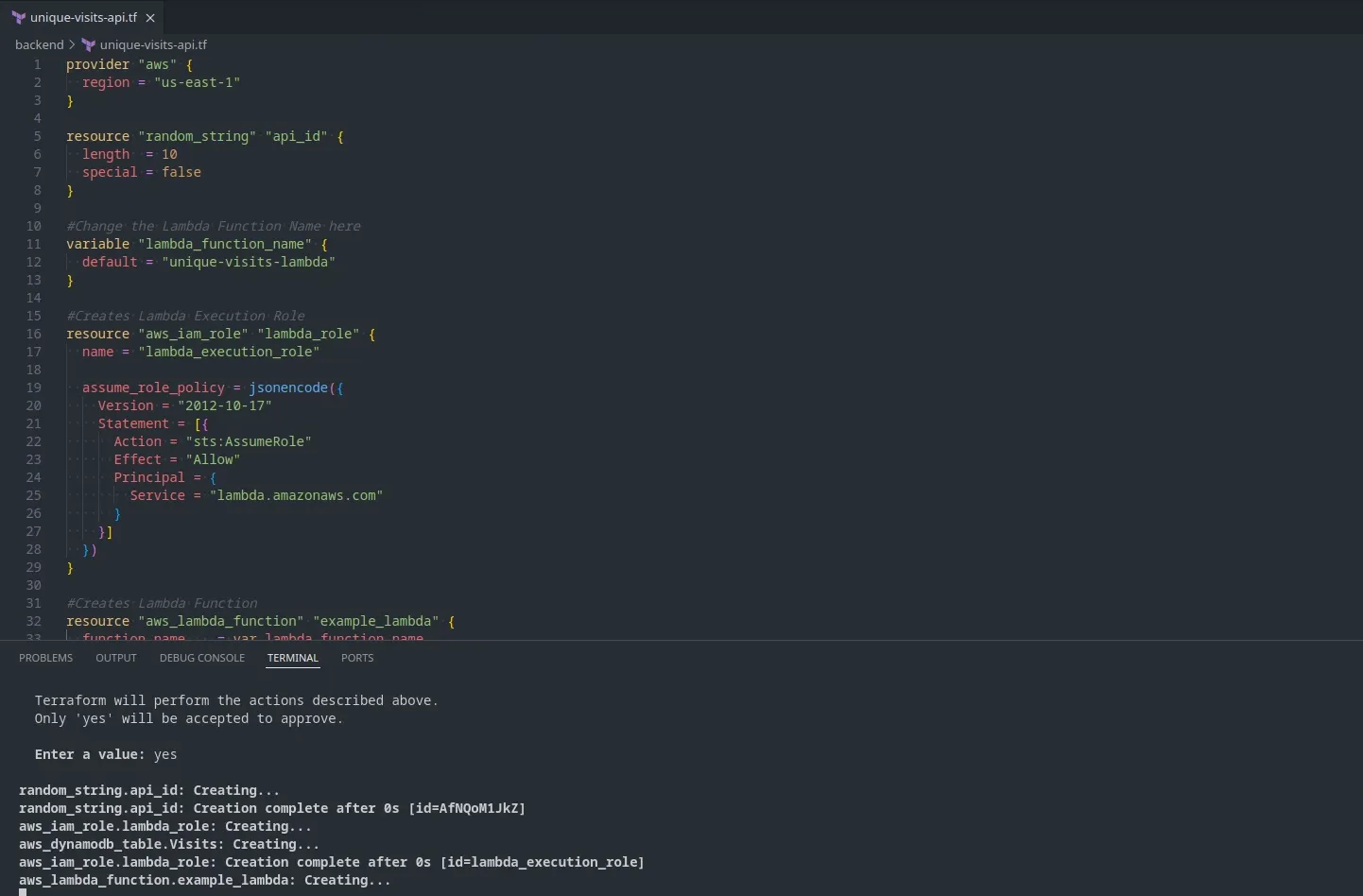

Orbit entry is a success. We are officially in the cloud! The frontend runs on ECS and is served through CloudFront, and the backend powers the unique visitor counter using API Gateway, Lambda, and DynamoDB. The backend is created entirely with Terraform; you can see part of the configuration in the image above. Being able to launch and tear down services with a few commands makes the process very easy. The Lambda code is also containerized, so if I need to update the code, I just push a new container image. This is also handled through GitHub Actions, much like deploying the frontend container. For the frontend, whenever I update the website I simply push to the staging branch of my repo, and the actions take it from there: if the tests pass, the workflow merges into the main branch, pushes the image to ECR, and runs the ECS update command.
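The pipeline itself lives in GitHub Actions workflows, which the post doesn’t show. To make the ordering concrete, here is a hedged Python sketch of the same gate: nothing is merged, pushed, or deployed unless the tests pass. The callables and the cluster and service names are hypothetical; in a real pipeline ecs_client would be boto3.client("ecs"), whose update_service call with forceNewDeployment=True tells ECS to start fresh tasks.

```python
def run_pipeline(run_tests, merge_to_main, push_image, ecs_client, cluster, service):
    """Hypothetical orchestration mirroring the staging -> main -> ECR -> ECS flow.

    Each step is passed in as a callable so the ordering can be checked
    without GitHub or AWS; ecs_client stands in for boto3.client("ecs").
    """
    if not run_tests():
        # A failing test suite stops everything: no merge, no image, no deploy.
        return "tests failed; nothing deployed"
    merge_to_main()
    push_image()
    # Redeploy the service so new tasks start; if the task definition points
    # at a mutable tag such as :latest, those tasks pull the image just pushed.
    ecs_client.update_service(
        cluster=cluster,
        service=service,
        forceNewDeployment=True,
    )
    return "deployed"
```

Gating every later step on the test result is what lets a push to staging be the only manual action in the release.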

AWS provides excellent documentation on running containerized services on both Lambda and ECS. Spending a little time with the documentation gave me exactly what I needed to build the images for each service and use ECR properly. I’m a big believer in reading documentation; it’s there to help you use a service properly. As a quick aside, I’ve always been like this. When I was about 11, my dad bought a boat for us to enjoy during the summers. I knew nothing about boats, so he bought the Coast Guard book on operating freshwater vessels. It covered all the regulations, as well as how-tos for everything. This book was thiccc (with 3 c’s), and I read it cover to cover and became the go-to person for everything on the boat. I knew all the regulations and proper procedures, and with some practice on the boat I was well prepared for most scenarios. I take the same approach to everything now: read the documentation and try to become an expert.

Now with AI, this has become even easier. I can pair reading documentation with AI tools to learn faster. AI can also complete tasks for me, such as generating much of the code used in this website, as well as working code in other projects I have done. It helped me create my GitHub Actions workflows for both the frontend and backend, so I got my CI/CD pipelines integrated very quickly and with relatively little pain. I’m new to coding at this level, but AI has helped me along the way. Disclaimer: I understand that publicly available AI tools such as ChatGPT and Copilot are not secure. I never input proprietary information into these tools, and I am very mindful of data and intellectual property security.

All stations prepare for controlled flight. Specialist, get me orbit verification and prepare the thruster calibration.

Controlled Flight

Excellent work, specialist, we are now in controlled flight. The frontend and backend of the website are in the cloud, both have CI/CD pipelines, and the website is live. Setting out to complete this challenge was one of the best things I’ve ever done. It pushed me to expand my knowledge in many ways and to apply my newfound AWS knowledge. The list of tools and services used in this project is not short. There was a lot of research, late nights, banging my head against the keyboard, and plenty of fist pumps of joy at each success. How I feel reminds me of a scene from The West Wing (one of the greatest TV shows of all time; watch it if you haven’t). One of the main characters, Josh Lyman, emerges from his office and proclaims, “victory is mine, victory is mine, great day in the morning people, victory is mine. I drink from the keg of glory…”.

To keep this blog post from going on endlessly, I invite you to continue the conversation with me on LinkedIn. Conveniently, you can click the Message me on LinkedIn button to do so!

Finally, a few shout-outs: to Forrest Brazeal for creating this challenge; to Adrian Cantrill for creating some fantastic AWS certification courses; to Ezra Hodge for pointing me toward AWS and suggesting cloud architecture as a career path. And last but not least, to my wife Ally, for understanding that sometimes I just had to stay up past 2am to figure out the problem I was working on, and for her endless support.